Kathryn M. O’Neill / MIT Technology Review; Photos by Ryan Hoover / UW ECE News

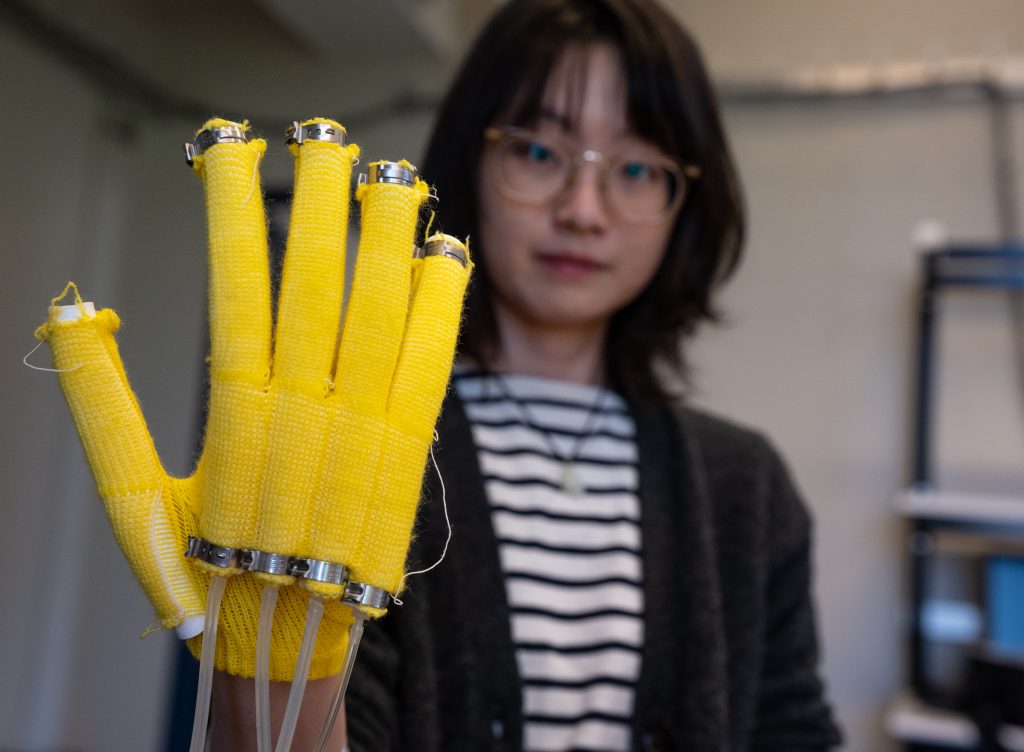

UW ECE assistant professor Yiyue Luo

Physical tasks such as hitting a ball or drawing blood are difficult to learn simply by listening to instructions or reading descriptions. That’s one reason UW ECE assistant professor Yiyue Luo is developing clothing that can sense where a person is, know what movement is needed to perform a task, and provide physical cues to guide performance.

“In a conventional learning scenario—say, learning tennis—the coach would hold your hand and let you feel how to grasp the racket. This physical interaction is very important,” says Luo. “What we have been doing is to capture, model, and augment such physical interaction.”

Luo, who was named to the 2024 Forbes 30 Under 30 list, has already developed a posture-sensing carpet and a smart glove that can capture and relay touch-based instructions. Her goal is to gather information on how experts perform physical tasks and create wearables that can help people move the same way, for example by providing a nudge.

Luo demonstrates a digitally machine-knitted assistive glove, designed to support hand movement.

Raised in Guangzhou, China, Luo earned her bachelor’s degree in materials science and engineering from the University of Illinois Urbana-Champaign, where she was introduced to the field of bioelectronics by one of its pioneers, Professor John A. Rogers. She then switched to electrical engineering for graduate school, earning her PhD at MIT with a dissertation on smart textiles—those with sensors, actuation capabilities, and the means to capture data. She now foresees developing smart textiles equipped with information about how to move—something like ChatGPT but for physical information. Gathering data about movement is the vital first step.

(Top left): samples from the UW ECE Wearable Intelligence Lab, showcasing digitally knitted fabrics integrated with both customized and commercial fibers for multimodal sensing and actuation; (top right): a digital design program used for generating the knitting pattern; (bottom left): close-up of the knitting machine’s individually actuated needles, which enable complex yarn manipulation via the yarn carrier; (bottom right): a knitted sleeve featuring integrated conductive electrodes for multimodal sensing. The sleeve can be worn like a conventional garment, offering a soft, conformal fit.

ChatGPT can answer text-based inquiries because it has been trained on enormous caches of text-based data—all initially captured through such technologies as a keyboard and mouse, Luo explains. But, she says, “for a lot of physical information [for example, the pressure, speed, and orientation of an action], such input-output interfaces are still missing.”

Those interfaces are what Luo is working to develop, with an eye toward advancing robotics and improving human-robot interactions. Her hope is that the work will one day improve health care—by guiding patients through physical therapy exercises, perhaps, or by sensing and changing the position of an immobile patient to prevent bedsores.

“I think patients and people in the health-care domain can potentially get huge benefits,” she says.

The industrial-scale Shima Seiki digital knitting machine at the Digital Fabrication Lab at UW, capable of combining standard and conductive yarns.

Reprinted with permission of Slice of MIT.